Unconstrained Fashion Landmark Detection via Hierarchical Recurrent Transformer Networks

Abstract

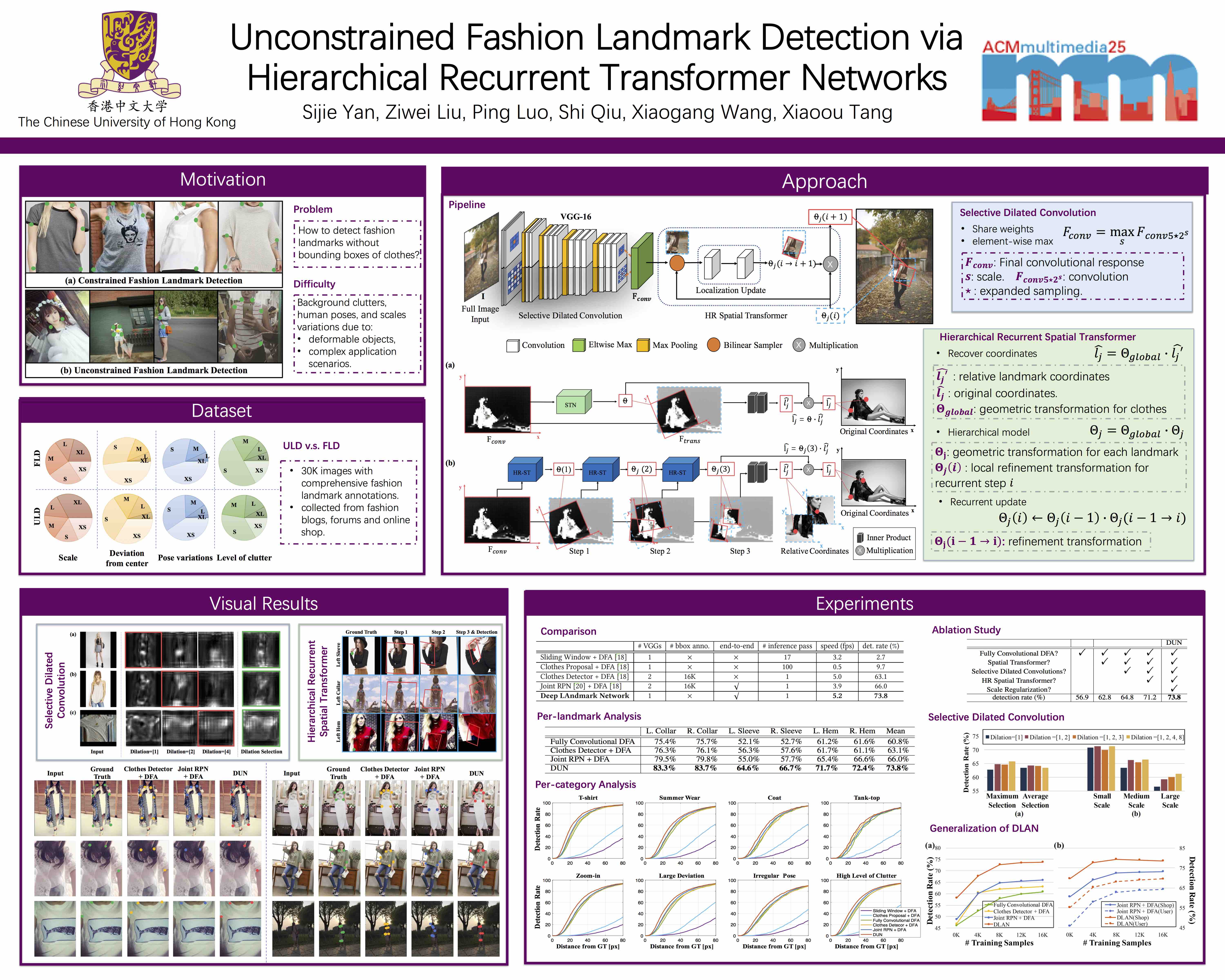

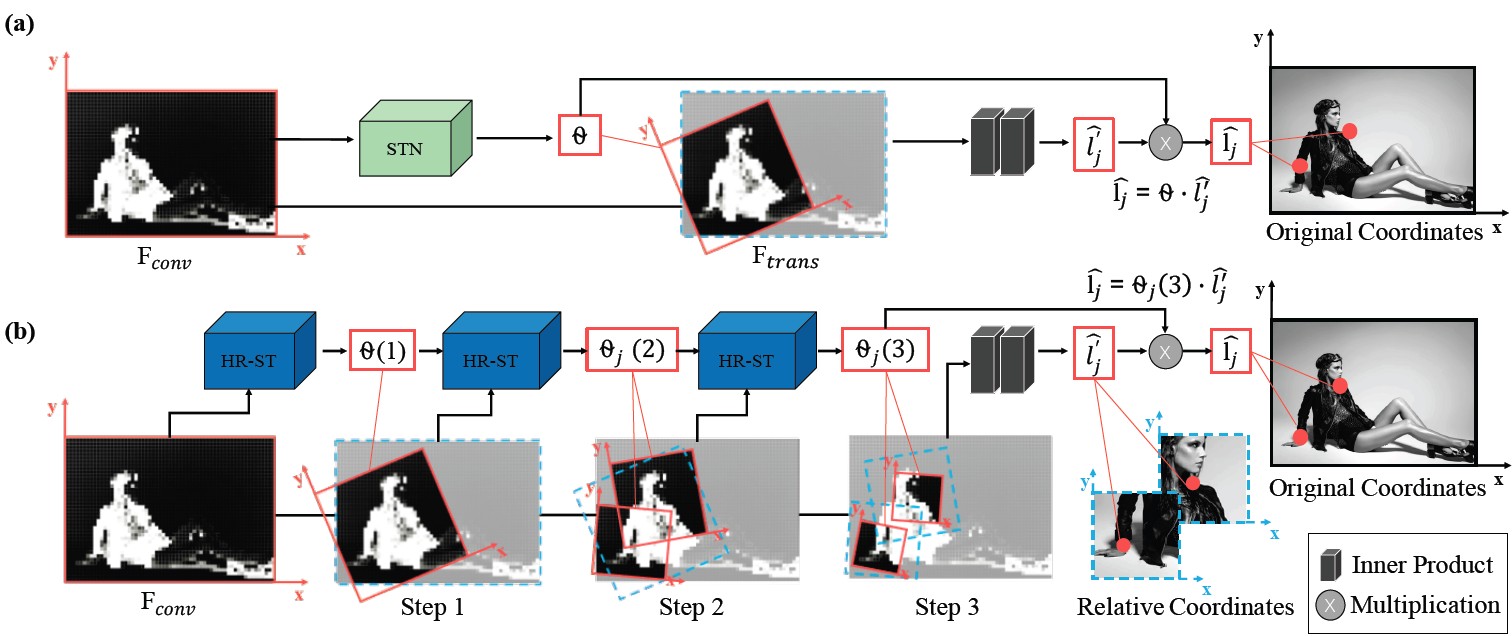

Fashion landmarks are functional key points defined on clothes, such as the corners of the neckline, hemline, and cuffs. They were recently introduced as an effective visual representation for fashion image understanding. However, detecting fashion landmarks is challenging due to background clutter, human poses, and scale variation. To remove these variations, previous work usually assumed that clothing bounding boxes are provided as additional annotations at both training and test time; such annotations are expensive to obtain and inapplicable in practice. This work addresses unconstrained fashion landmark detection, where clothing bounding boxes are provided in neither training nor test. To this end, we present a novel Deep LAndmark Network (DLAN), where bounding boxes and landmarks are jointly estimated and trained iteratively in an end-to-end manner. DLAN contains two dedicated modules: a Selective Dilated Convolution for handling scale discrepancies, and a Hierarchical Recurrent Spatial Transformer for handling background clutter. To evaluate DLAN, we present a large-scale fashion landmark dataset, the Unconstrained Landmark Database (ULD), consisting of 30K images. Statistics show that ULD is more challenging than existing datasets in terms of image scale, background clutter, and human poses. Extensive experiments demonstrate the effectiveness of DLAN over state-of-the-art methods. DLAN also generalizes well across different clothing categories and modalities, making it well suited to real-world fashion analysis.

Spotlight

Code and Models

Demo Video

Citation

@inproceedings{yan2017unconstrainedlandmarks,
  author = {Sijie Yan and Ziwei Liu and Ping Luo and Shi Qiu and Xiaogang Wang and Xiaoou Tang},
  title = {Unconstrained Fashion Landmark Detection via Hierarchical Recurrent Transformer Networks},
  booktitle = {ACM Multimedia (ACM MM)},
  month = {October},
  year = {2017}
}