DeepFashion: Powering Robust Clothes Recognition and Retrieval with Rich Annotations

Abstract

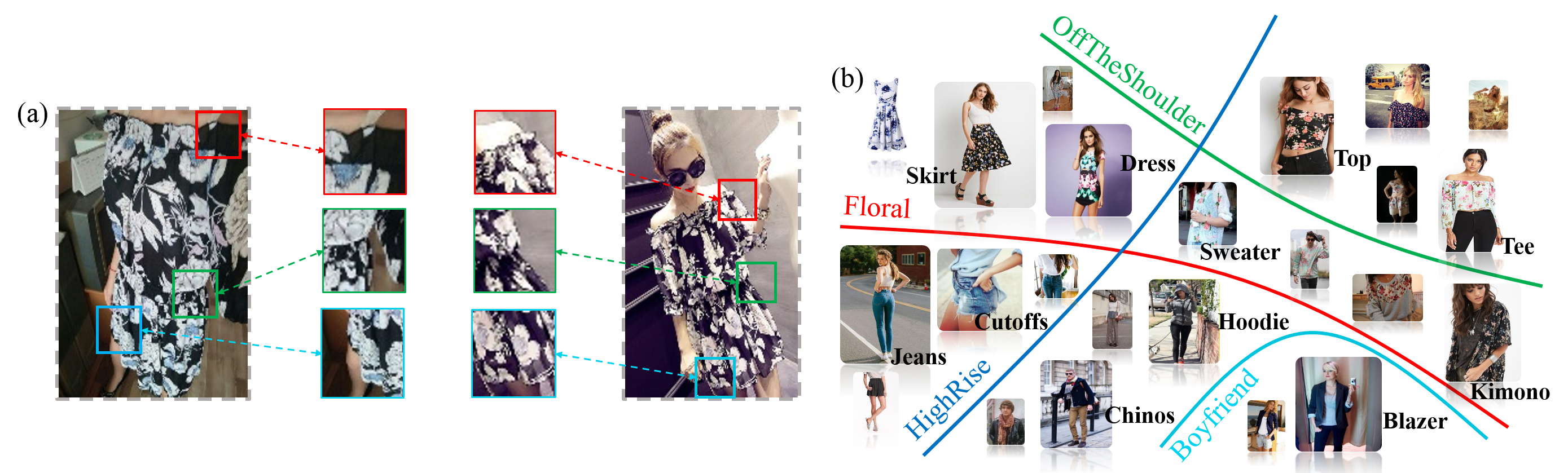

Recent advances in clothes recognition have been driven by the construction of clothes datasets. Existing datasets, however, offer limited annotations and struggle to cope with the various challenges of real-world applications. In this work, we introduce DeepFashion, a large-scale clothes dataset with comprehensive annotations. It contains over 800,000 images, richly annotated with a large set of attributes, clothing landmarks, and correspondences between images taken under different scenarios, including store, street snapshot, and consumer. Such rich annotations enable the development of powerful algorithms for clothes recognition and facilitate future research. To demonstrate the advantages of DeepFashion, we propose a new deep model, FashionNet, which learns clothing features by jointly predicting clothing attributes and landmarks. The estimated landmarks are then used to pool or gate the learned features, and the model is optimized in an iterative manner. Extensive experiments demonstrate the effectiveness of FashionNet and the usefulness of DeepFashion.
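As a rough illustration of what "pool or gate the learned features" could look like, the sketch below max-pools a small window of a feature map around each estimated landmark and zeroes out the result for landmarks marked invisible. This is a minimal sketch under stated assumptions, not the FashionNet implementation: the function name `landmark_pool`, the `(x, y, visible)` landmark format, the `radius` parameter, and the nested-list feature map are all hypothetical choices made for illustration.

```python
from typing import List, Tuple

# Assumed layout: feature_map[row][col][channel]
FeatureMap = List[List[List[float]]]
# Assumed landmark format: (x, y, visible)
LandmarkTuple = Tuple[int, int, bool]


def landmark_pool(
    feature_map: FeatureMap,
    landmarks: List[LandmarkTuple],
    radius: int = 1,
) -> List[float]:
    """Max-pool features around each landmark and gate by visibility.

    Returns one pooled vector per landmark, concatenated into a flat list.
    Invisible landmarks contribute a vector of zeros (the "gate").
    """
    height = len(feature_map)
    width = len(feature_map[0])
    channels = len(feature_map[0][0])

    pooled: List[float] = []
    for x, y, visible in landmarks:
        if not visible:
            # Gate: suppress features for landmarks that are not visible.
            pooled.extend([0.0] * channels)
            continue

        # Clamp the landmark into the map so the window is never empty.
        x = min(max(x, 0), width - 1)
        y = min(max(y, 0), height - 1)
        rows = range(max(0, y - radius), min(height, y + radius + 1))
        cols = range(max(0, x - radius), min(width, x + radius + 1))

        # Pool: channel-wise maximum over the local window.
        for c in range(channels):
            pooled.append(max(feature_map[r][q][c] for r in rows for q in cols))
    return pooled
```

The pooled vector has a fixed length of `len(landmarks) * channels`, so it can be concatenated with global features regardless of where the landmarks fall in the image.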

Materials

Code and Models

Dataset

We contribute the DeepFashion database, a large-scale clothes database with several appealing properties:

First, DeepFashion contains over 800,000 diverse fashion images ranging from well-posed shop images to unconstrained consumer photos.

Second, DeepFashion is annotated with rich information about clothing items. Each image in this dataset is labeled using a set of 50 categories and 1,000 descriptive attributes, together with a bounding box and clothing landmarks.

Third, DeepFashion contains over 300,000 cross-pose/cross-domain image pairs.
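The per-image annotations listed above can be pictured as a single record. The following is a minimal sketch, assuming a hypothetical in-memory layout: the class and field names, the `(x1, y1, x2, y2)` bounding-box convention, and the per-landmark visibility flag are illustrative assumptions and do not describe the dataset's actual file format. Only the counts of 50 categories and 1,000 attributes come from the text.

```python
from dataclasses import dataclass, field
from typing import List, Tuple

NUM_CATEGORIES = 50    # stated in the dataset description
NUM_ATTRIBUTES = 1000  # stated in the dataset description


@dataclass
class Landmark:
    x: int
    y: int
    visible: bool = True  # assumption: landmarks may be occluded


@dataclass
class ClothingAnnotation:
    image_path: str
    category: int                    # index in [0, NUM_CATEGORIES)
    attributes: List[int]            # indices of attributes that apply
    bbox: Tuple[int, int, int, int]  # assumed (x1, y1, x2, y2) in pixels
    landmarks: List[Landmark] = field(default_factory=list)

    def validate(self) -> None:
        """Raise ValueError if any label falls outside the assumed ranges."""
        if not 0 <= self.category < NUM_CATEGORIES:
            raise ValueError(f"category {self.category} out of range")
        if any(not 0 <= a < NUM_ATTRIBUTES for a in self.attributes):
            raise ValueError("attribute index out of range")
        x1, y1, x2, y2 = self.bbox
        if x2 <= x1 or y2 <= y1:
            raise ValueError("bounding box must have positive area")

    def attribute_vector(self) -> List[int]:
        """Return a multi-hot vector of length NUM_ATTRIBUTES."""
        vec = [0] * NUM_ATTRIBUTES
        for a in self.attributes:
            vec[a] = 1
        return vec
```

For example, `ClothingAnnotation("img/0001.jpg", 3, [12, 407], (10, 20, 200, 300))` (a made-up record) passes `validate()` and yields a multi-hot vector with two active entries.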

DeepFashion Dataset

Citation

@inproceedings{liu2016deepfashion,
  author = {Liu, Ziwei and Luo, Ping and Qiu, Shi and Wang, Xiaogang and Tang, Xiaoou},
  title = {DeepFashion: Powering Robust Clothes Recognition and Retrieval with Rich Annotations},
  booktitle = {Proceedings of IEEE Conference on Computer Vision and Pattern Recognition (CVPR)},
  month = {June},
  year = {2016}
}