Fast Burst Images Denoising

Abstract

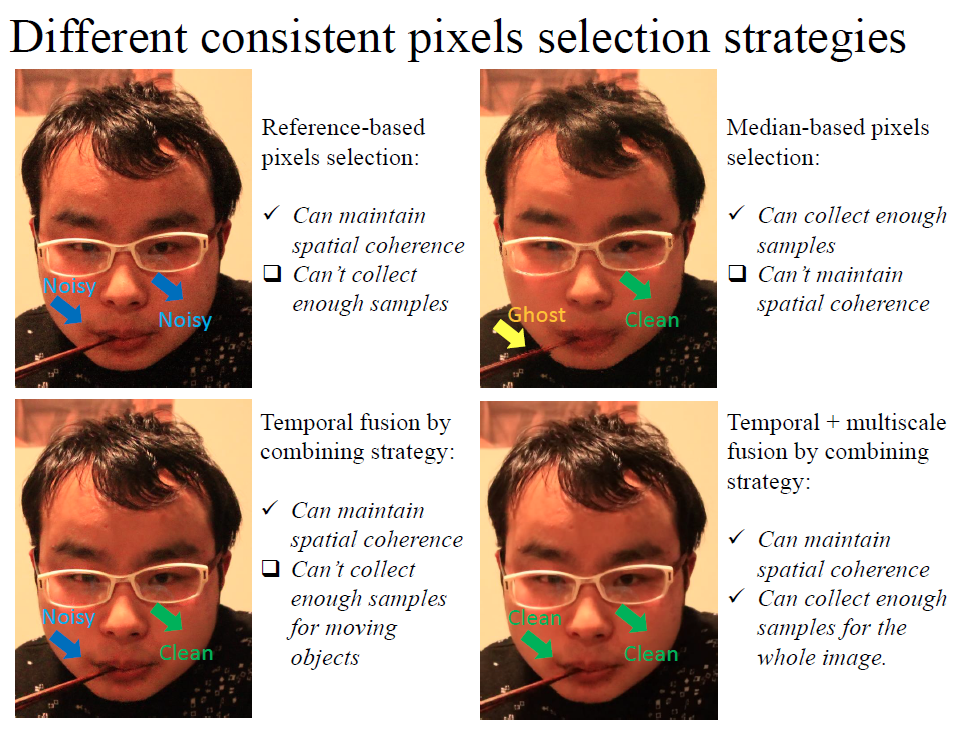

This paper presents a fast denoising method that produces a clean image from a burst of noisy images. We accelerate alignment of the images by introducing a lightweight camera motion representation called homography flow. The aligned images are then fused to create a denoised output with rapid per-pixel operations in the temporal and spatial domains. To handle scene motion during capture, we propose a mechanism that selects consistent pixels for temporal fusion to "synthesize" a clean, ghost-free image, which largely avoids the cost of tracking motion between frames. With these efficient components combined, our method runs several orders of magnitude faster than previous work while achieving comparable denoising quality. A smartphone prototype demonstrates that our method is practical and works well on a large variety of real examples.
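To make the idea of fusing only consistent pixels concrete, here is a minimal sketch in plain Python. It is not the paper's selection rule: it assumes the frames are already aligned, treats one frame as the reference, and keeps a sample only if it lies within a threshold of the reference value. The function name `fuse_consistent` and the parameters `ref_index` and `tau` are hypothetical and chosen for illustration.

```python
def fuse_consistent(frames, ref_index=0, tau=0.1):
    """Average, per pixel, only the frames that agree with the reference.

    frames: list of equally sized 2D lists of floats, assumed already aligned.
    ref_index: index of the reference frame (illustrative assumption).
    tau: hypothetical threshold on absolute intensity difference.
    """
    ref = frames[ref_index]
    height, width = len(ref), len(ref[0])
    fused = [[0.0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            ref_value = ref[y][x]
            # Keep samples close to the reference value; the reference
            # itself always qualifies, so the list is never empty.
            consistent = [f[y][x] for f in frames
                          if abs(f[y][x] - ref_value) <= tau]
            fused[y][x] = sum(consistent) / len(consistent)
    return fused


# Three aligned 1x2 frames; the second pixel of frame 1 is an outlier,
# standing in for a moving object.
burst = [[[0.50, 0.50]], [[0.52, 0.90]], [[0.48, 0.50]]]
result = fuse_consistent(burst)
```

In this toy burst the outlier sample is left out of the average for the second pixel, so the moving content does not bleed into the result as a ghost, and no motion needs to be tracked between frames.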

Spotlight

Materials

Presentation

Fast Forward Video

Real-world Dataset

Noisy Bursts (NB) Dataset:

25 burst image sequences under various real-world scenarios

Each with 10 successive noisy images

Shot by 5 different smartphones

Noisy Bursts (NB) Dataset

Synthetic Dataset

Product Transfer

Windows BLINK App

Microsoft Selfie App

Citation

@article{liu2014burstdenoising,
  author={Ziwei Liu and Lu Yuan and Xiaoou Tang and Matt Uyttendaele and Jian Sun},
  title={Fast Burst Images Denoising},
  journal={ACM Transactions on Graphics (TOG)},
  volume={33},
  number={6},
  year={2014},
  publisher={ACM}
}